Apple Intelligence Expands Reach, Opens Doors for Developers

Apple today announced a major expansion of Apple Intelligence at WWDC25, bringing new capabilities across its device ecosystem and, most notably, opening its on-device foundation model to developers. The move lets third-party apps build private, intelligent experiences directly into their own features.

Apple Intelligence: Key Enhancements and What’s New

Previously, Apple Intelligence focused on core system-level integrations. This latest iteration significantly expands its reach and functionality in several key areas:

Developer Access to On-Device Foundation Model

This is arguably the most impactful change. For the first time, developers can directly access the on-device large language model at the core of Apple Intelligence. Because the model runs on the device itself, apps can offer fast, privacy-preserving intelligent features that work even when users are offline and incur no cloud API costs. Potential uses range from personalised quizzes in education apps to offline natural language search in outdoor guides.

Live Translation Breaks Down Language Barriers

Apple Intelligence now builds Live Translation directly into Messages, FaceTime, and Phone. Messages are translated automatically as users type, FaceTime calls show translated live captions, and phone conversations are translated aloud. Because translation runs on-device, these conversations stay private.

Expanded Creativity with Genmoji and Image Playground

Genmoji and Image Playground receive significant upgrades. Users can now mix existing emoji together and combine them with text descriptions to create new Genmoji. Image Playground gains new styles through ChatGPT integration, including an “Any Style” option that lets users describe the look they want, while users stay in control of what is shared with ChatGPT.

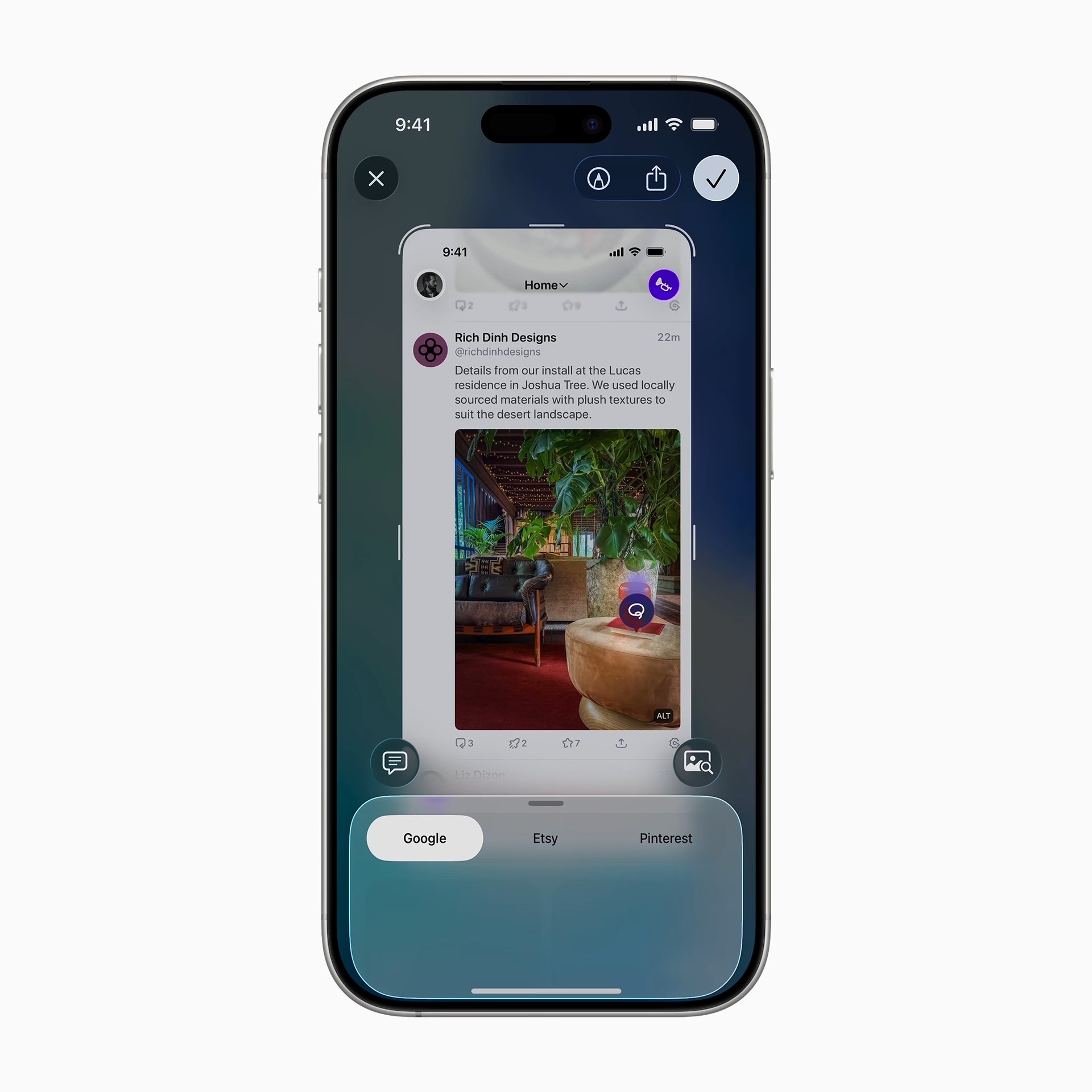

Enhanced Visual Intelligence for On-Screen Actions

Visual intelligence now extends beyond the camera to whatever is on the user’s iPhone screen. Users can ask ChatGPT questions about what they’re viewing, search for similar items on platforms such as Google and Etsy, or highlight a specific object to search for it. It also recognises when an event appears on screen and suggests adding it to Calendar, pre-populating its details.

Apple Intelligence Comes to Apple Watch with Workout Buddy

A new “Workout Buddy” feature on Apple Watch uses Apple Intelligence to provide personalised, motivational insights during workouts. It analyses data from the current workout together with the user’s fitness history to generate dynamic spoken feedback tailored to that user, with the data processed privately and securely on-device.

Supercharged Shortcuts

Shortcuts gain new “intelligent actions” powered by Apple Intelligence. Users can tap directly into the on-device model or Private Cloud Compute to generate richer responses within their shortcuts while keeping their data private. They can also choose ChatGPT to generate responses.

Deeper System Integration and Quality-of-Life Improvements

Apple Intelligence is now even more deeply embedded across the OS. Reminders can identify relevant actions from emails or websites and categorise them automatically, Apple Wallet can summarise order tracking details, and Messages can suggest polls and create unique chat backgrounds. Existing features such as Writing Tools, Clean Up in Photos, Smart Reply, and natural language search in Photos also continue to improve.

Broader Language Support

Apple Intelligence is expanding its language support, with eight more languages coming by the end of the year: Danish, Dutch, Norwegian, Portuguese (Portugal), Swedish, Turkish, Chinese (Traditional), and Vietnamese.

A Continued Commitment to Privacy

Apple reiterated that privacy remains central to Apple Intelligence. Many of the models run entirely on-device; for requests that need larger models, Private Cloud Compute extends the privacy and security of iPhone into the cloud. Apple emphasises that user data sent to Private Cloud Compute is never stored or shared and is used only to fulfil the request. Independent experts can also inspect the code to verify these privacy promises.

The new Apple Intelligence features are available for developer testing starting today, with a public beta arriving next month. They will reach users this fall on supported devices, including all iPhone 16 models, iPhone 15 Pro, iPhone 15 Pro Max, iPad mini (A17 Pro), and iPad and Mac models with M1 or later, provided Siri and the device language are set to a supported language.